Journal of Southern Medical University, 2024, Vol. 44, Issue (5): 950-959. doi: 10.12122/j.issn.1673-4254.2024.05.17

Chen WANG1,2, Mingqiang MENG1,2, Mingqiang LI2, Yongbo WANG1,2, Dong ZENG1,2, Zhaoying BIAN1,2, Jianhua MA1,2

Received: 2023-10-31

Online: 2024-05-20

Published: 2024-06-06

Corresponding author: Jianhua MA

E-mail: wangchen9909@outlook.com; jhma@smu.edu.cn

About the first author: Chen WANG, master's degree candidate, E-mail: wangchen9909@outlook.com


Abstract:

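Objective: To address the truncation artifacts and structural distortion caused by an insufficient CT scan field of view (FOV), we propose DDTrans, a model for reconstruction from truncated CT data based on Transformer coupled feature learning in the dual (projection and image) domains. Methods: Transformer-based restoration networks were constructed for the projection domain and the image domain, respectively. The long-range dependency modeling of the Transformer attention modules captures global structural features, which are used to restore the projection data and enhance the reconstructed image. A differentiable Radon back-projection operator layer is placed between the projection-domain and image-domain networks so that DDTrans can be trained end to end. In addition, a projection consistency loss is introduced to constrain the forward projection of the reconstructed image, further improving reconstruction accuracy. Results: Experiments on simulated Mayo data showed that, under both partial truncation and interior scanning, DDTrans outperformed the compared algorithms in removing truncation artifacts at the FOV edge and recovering information outside the FOV. Conclusion: DDTrans effectively removes CT truncation artifacts, yielding accurate reconstruction inside the FOV and approximate reconstruction outside it.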
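The projection consistency loss described in the abstract penalizes the mismatch between the forward projection of the reconstructed image and projection data. The sketch below illustrates the idea in NumPy under stated assumptions: a 2D parallel-beam geometry, a crude nearest-neighbour rotate-and-sum projector standing in for the paper's differentiable Radon operator layer, and a mean-squared-error form of the loss. None of the names (`forward_project`, `back_project`, `projection_consistency_loss`, `projection_consistency_grad`) come from the paper; they are hypothetical. The sketch also shows why a Radon operator layer can sit inside an end-to-end network: the projector is linear, so the gradient of the loss with respect to the image is simply the back-projection (adjoint) of the sinogram residual.

```python
import numpy as np


def _rotation_indices(n, angle):
    """Nearest-neighbour source pixel for each pixel of an n x n grid rotated by `angle` (rad)."""
    c = (n - 1) / 2.0
    ys, xs = np.mgrid[0:n, 0:n].astype(float) - c
    xi = np.rint(np.cos(angle) * xs + np.sin(angle) * ys + c).astype(int)
    yi = np.rint(-np.sin(angle) * xs + np.cos(angle) * ys + c).astype(int)
    valid = (xi >= 0) & (xi < n) & (yi >= 0) & (yi < n)
    return yi, xi, valid


def forward_project(image, angles):
    """Assumed parallel-beam sinogram (views x bins): rotate the image, then sum columns."""
    n = image.shape[0]
    sino = np.zeros((len(angles), n))
    for i, a in enumerate(angles):
        yi, xi, valid = _rotation_indices(n, a)
        rotated = np.zeros((n, n))
        rotated[valid] = image[yi[valid], xi[valid]]
        sino[i] = rotated.sum(axis=0)  # detector bin x integrates rotated column x
    return sino


def back_project(sino, angles, n):
    """Exact adjoint of forward_project: smear each bin back onto the pixels it summed."""
    image = np.zeros((n, n))
    for i, a in enumerate(angles):
        yi, xi, valid = _rotation_indices(n, a)
        smeared = np.broadcast_to(sino[i], (n, n))  # smeared[y, x] = sino[i, x]
        np.add.at(image, (yi[valid], xi[valid]), smeared[valid])
    return image


def projection_consistency_loss(recon, ref_sino, angles, mask=None):
    """Assumed MSE form: mean((A x - p)^2), optionally over a boolean mask of trusted bins."""
    residual = forward_project(recon, angles) - ref_sino
    if mask is not None:
        residual = residual[mask]
    return float(np.mean(residual ** 2))


def projection_consistency_grad(recon, ref_sino, angles):
    """Gradient of the unmasked loss: (2 / M) * A^T (A x - p), with M sinogram entries."""
    residual = forward_project(recon, angles) - ref_sino
    return 2.0 * back_project(residual, angles, recon.shape[0]) / residual.size


# Usage: the image that generated the sinogram has zero loss; a wrong image does not.
n = 32
angles = np.linspace(0.0, np.pi, 60, endpoint=False)
truth = np.zeros((n, n))
truth[10:22, 8:24] = 1.0
p = forward_project(truth, angles)
print(projection_consistency_loss(truth, p, angles))              # 0.0
print(projection_consistency_loss(np.zeros((n, n)), p, angles))   # positive
```

In a deep-learning framework the same loss would be built from an autograd-aware projector, so this gradient would be computed automatically rather than written by hand.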

Chen WANG, Mingqiang MENG, Mingqiang LI, Yongbo WANG, Dong ZENG, Zhaoying BIAN, Jianhua MA. Reconstruction from CT truncated data based on dual-domain transformer coupled feature learning[J]. Journal of Southern Medical University, 2024, 44(5): 950-959.

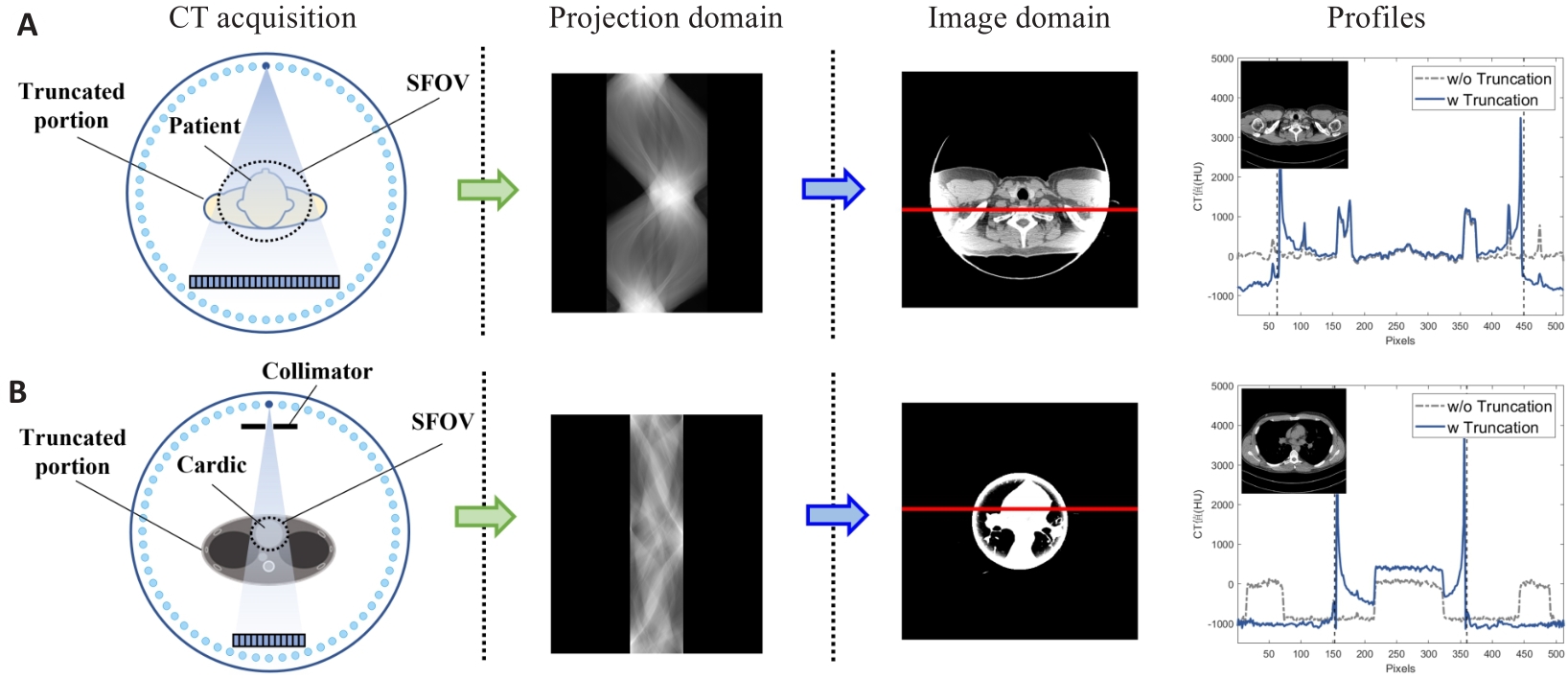

Fig.1 Illustration of truncation artifacts: projection data acquired under two truncation conditions, the corresponding reconstructed images, and profiles compared with the non-truncated image along the red solid line (vertical dashed lines mark the truncation boundaries). A: Partial truncation at the patient's shoulder. B: Cardiac interior scanning.
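Both truncation conditions in Fig.1 amount to a detector that is narrower than the object. A minimal way to simulate them, assuming the full sinogram is available as a (views x detector bins) array, is to keep only the central detector bins. The helper names and the classification rule below are hypothetical illustrations, not the paper's simulation protocol: the rule simply labels a case "interior" when every view loses data outside the detector window and "partial" when only some views do.

```python
import numpy as np


def truncate_detector(sino, n_keep):
    """Simulate a limited FOV by keeping only the central `n_keep` detector bins.

    `sino` is a (views x detector bins) array. Returns the truncated sinogram
    (unmeasured bins set to 0) and a boolean mask of the measured bins.
    """
    n_det = sino.shape[1]
    start = (n_det - n_keep) // 2
    mask = np.zeros(sino.shape, dtype=bool)
    mask[:, start:start + n_keep] = True
    return np.where(mask, sino, 0.0), mask


def truncation_type(full_sino, mask, tol=1e-6):
    """Hypothetical heuristic: which views lose nonzero data outside the detector window?

    'partial'  -> only some views are truncated (e.g. wide shoulders, Fig.1A)
    'interior' -> every view is truncated (FOV lies inside the object, Fig.1B)
    """
    n_views = full_sino.shape[0]
    # Every view drops the same number of bins, so the lost values reshape per view.
    lost = np.abs(full_sino[~mask]).reshape(n_views, -1) > tol
    truncated_views = lost.any(axis=1)
    if not truncated_views.any():
        return "none"
    return "interior" if truncated_views.all() else "partial"
```

For example, an object whose projections stay within the central bins returns "none", while a sinogram that is nonzero across the whole detector in every view returns "interior".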

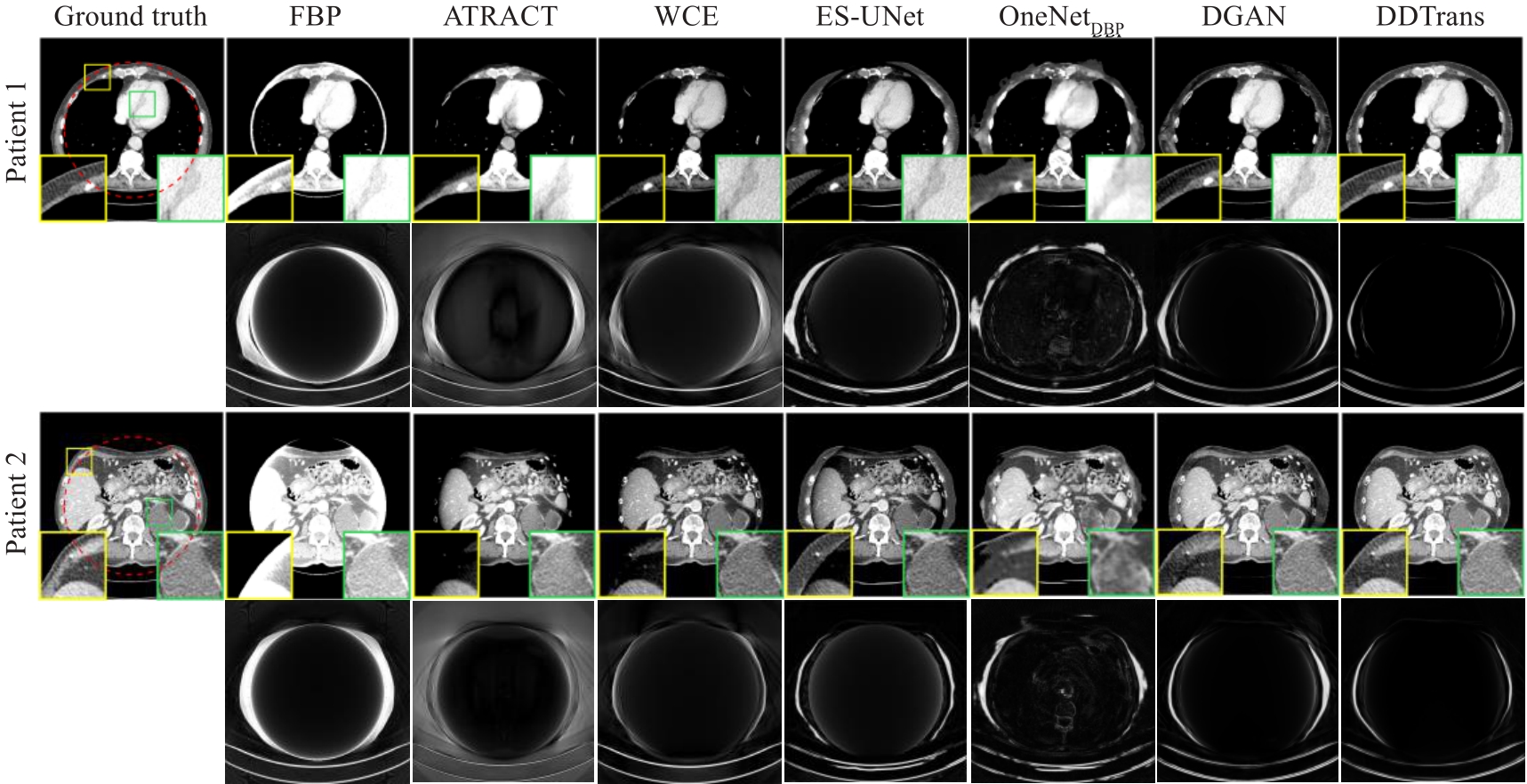

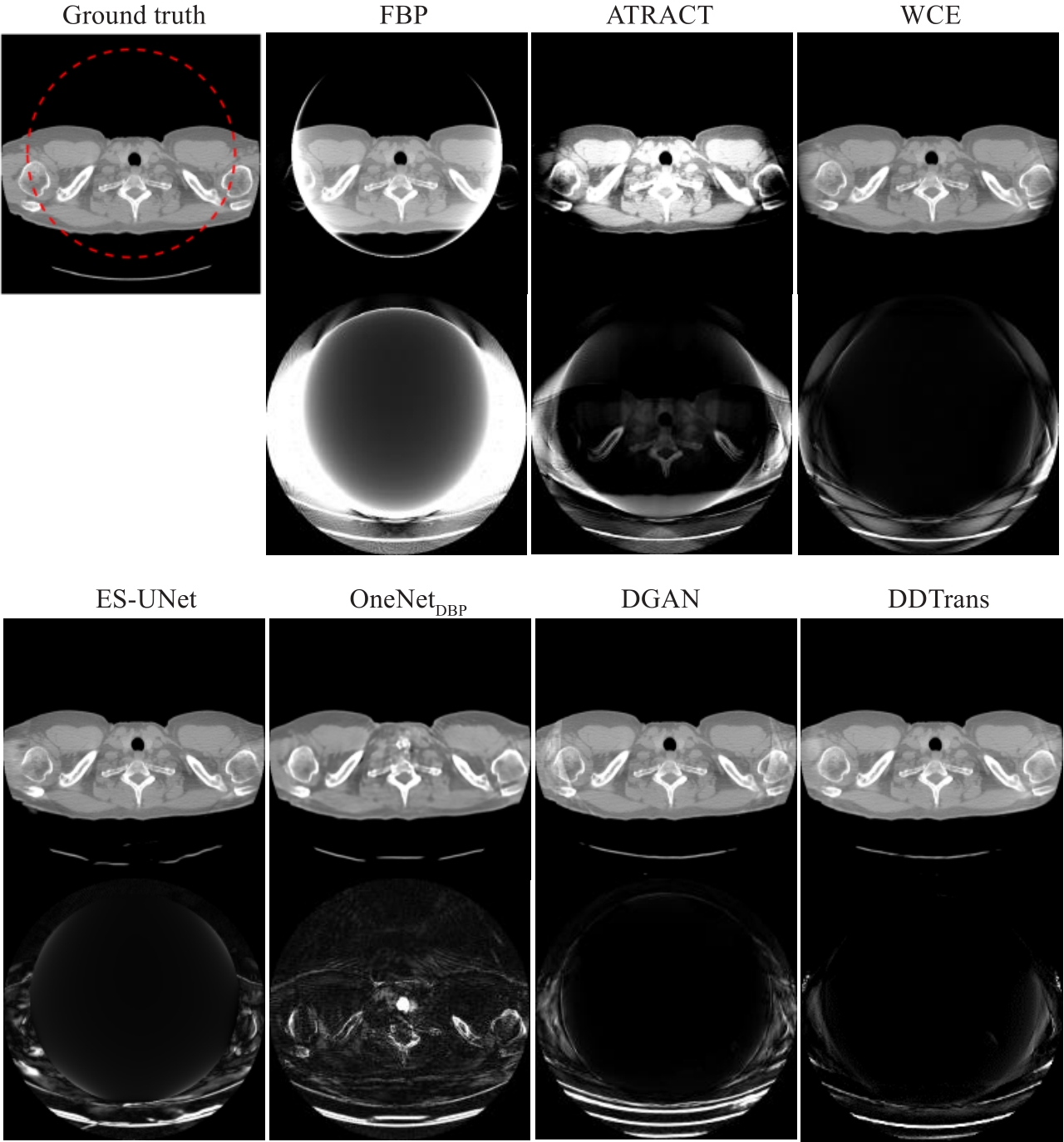

Fig.3 Comparison of truncation artifact correction results of different methods on partially truncated data. Display window: [-200, 200] HU. The second and fourth rows show the absolute residual images of each method's result with respect to the ground truth.


Tab.1 Quantitative comparison of different methods on partially truncated data

| Methods | RMSE (Inside FOV) | RMSE (Outside FOV) | SSIM (Inside FOV) | SSIM (Outside FOV) | PSNR (Inside FOV, dB) | PSNR (Outside FOV, dB) |
|---|---|---|---|---|---|---|
| FBP | 189.0240 | 269.5267 | 0.9134 | 0.5618 | 18.9133 | 4.3666 |
| ATRACT | 62.8714 | 234.9390 | 0.9290 | 0.6436 | 29.4219 | 7.0502 |
| WCE | 26.1827 | 152.8316 | 0.9628 | 0.7531 | 38.0734 | 9.5631 |
| ES-UNet | 40.6024 | 155.7068 | 0.9458 | 0.8858 | 33.2551 | 9.2289 |
| OneNetDBP | 61.3758 | 158.5035 | 0.8821 | 0.8530 | 27.1814 | 9.2492 |
| DGAN | 22.0072 | 138.6643 | 0.9837 | 0.8917 | 41.8713 | 10.3352 |
| DDTrans | 5.4097 | 116.9005 | 0.9991 | 0.9250 | 52.4910 | 12.0010 |
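The quantitative tables report each metric separately inside and outside the FOV. The sketch below shows one way to compute region-restricted RMSE and PSNR with boolean masks. The circular FOV radius, the peak value `data_range` used in PSNR, and the exact extent of the "outside FOV" region are assumptions because this excerpt does not state them, so the sketch is not expected to reproduce the tabulated values; all function names are hypothetical.

```python
import numpy as np


def circular_fov_mask(n, radius):
    """True for pixels whose centre lies within `radius` pixels of the image centre."""
    c = (n - 1) / 2.0
    ys, xs = np.mgrid[0:n, 0:n]
    return (xs - c) ** 2 + (ys - c) ** 2 <= radius ** 2


def masked_rmse(pred, target, mask):
    """Root-mean-square error over the pixels selected by a boolean mask."""
    diff = pred[mask].astype(float) - target[mask].astype(float)
    return float(np.sqrt(np.mean(diff ** 2)))


def masked_psnr(pred, target, mask, data_range):
    """PSNR in dB: 20 * log10(data_range / RMSE); `data_range` is an assumed peak value."""
    return float(20.0 * np.log10(data_range / masked_rmse(pred, target, mask)))


# Usage on synthetic images (values are illustrative only).
rng = np.random.default_rng(0)
target = rng.normal(0.0, 100.0, (64, 64))
pred = target + rng.normal(0.0, 20.0, (64, 64))
inside = circular_fov_mask(64, radius=24)
outside = ~inside  # assumption: "outside FOV" = every pixel not in the circular FOV
print(masked_rmse(pred, target, inside), masked_rmse(pred, target, outside))
print(masked_psnr(pred, target, inside, data_range=2000.0))
```

Because PSNR depends on the chosen `data_range`, PSNR values are only comparable across methods evaluated with the same peak value and the same masks.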

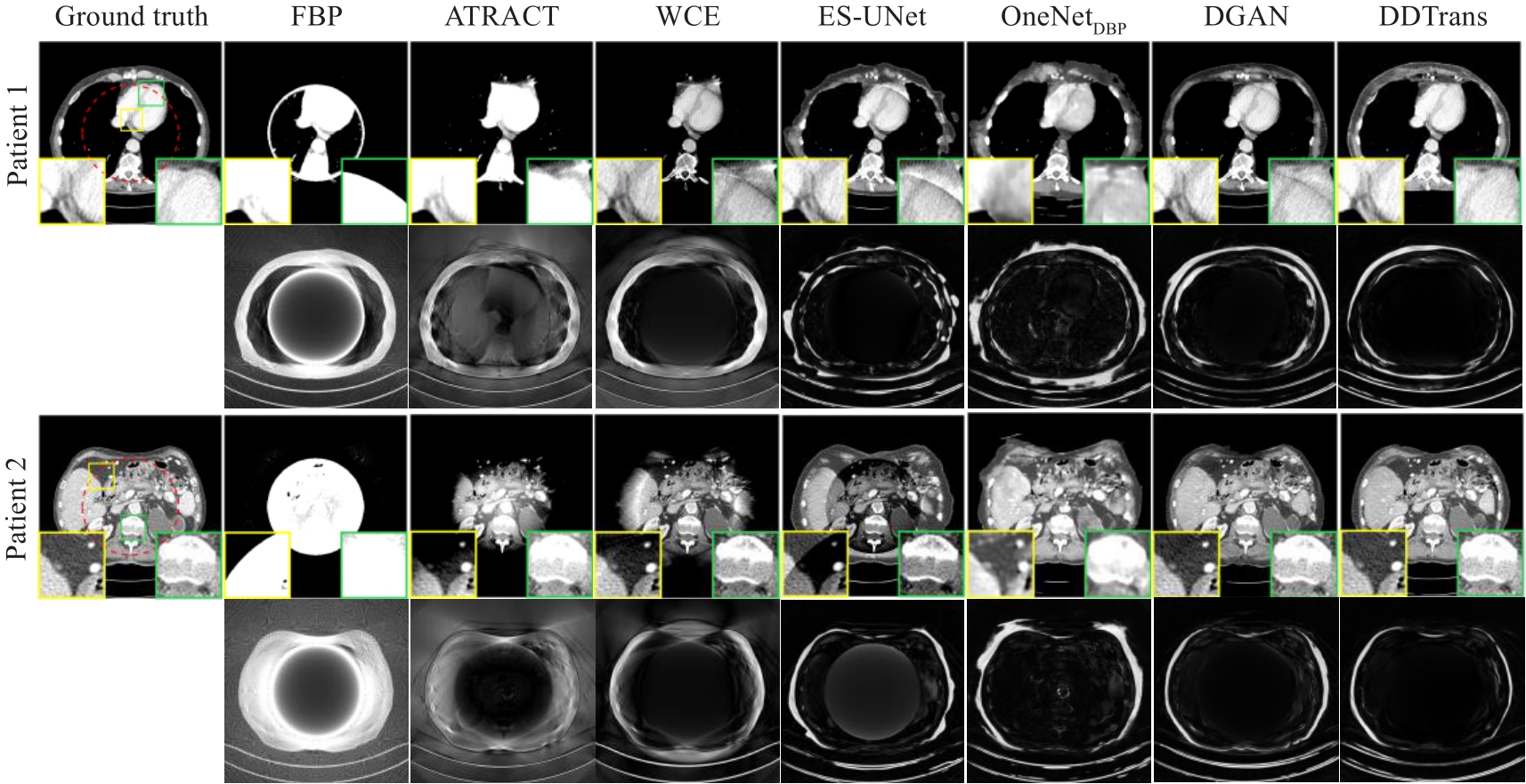

Fig.5 Comparison of truncation artifact correction results of different methods for interior scanning. Display window: [-200, 200] HU. The second and fourth rows show the absolute residual images of each method's result with respect to the ground truth.


Tab.2 Quantitative comparison of different methods on interior scanning data

| Methods | RMSE (Inside FOV) | RMSE (Outside FOV) | SSIM (Inside FOV) | SSIM (Outside FOV) | PSNR (Inside FOV, dB) | PSNR (Outside FOV, dB) |
|---|---|---|---|---|---|---|
| FBP | 488.4375 | 556.6830 | 0.6466 | 0.2275 | 7.5358 | 7.0234 |
| ATRACT | 62.3716 | 287.6859 | 0.8911 | 0.5542 | 25.8800 | 13.1452 |
| WCE | 37.0367 | 225.5691 | 0.8819 | 0.5915 | 32.9671 | 14.9137 |
| ES-UNet | 54.7751 | 226.6859 | 0.8906 | 0.8062 | 29.2464 | 14.8481 |
| OneNetDBP | 48.7271 | 219.1173 | 0.8279 | 0.7781 | 26.1069 | 15.2767 |
| DGAN | 29.6017 | 201.8265 | 0.9537 | 0.7769 | 33.4705 | 15.4285 |
| DDTrans | 8.0043 | 174.9918 | 0.9836 | 0.8317 | 42.9410 | 17.0266 |
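The SSIM columns are likewise split into regions. Standard SSIM averages a statistic computed over local (usually Gaussian) windows; for brevity, the sketch below swaps in a simplified single-window (global) SSIM over the masked pixels, with the usual constants C1 = (0.01 L)^2 and C2 = (0.03 L)^2 for data range L. This is an illustrative simplification with a hypothetical function name, not necessarily how the authors computed SSIM.

```python
import numpy as np


def masked_global_ssim(pred, target, mask, data_range):
    """Single-window SSIM over masked pixels (a simplification of windowed SSIM).

    SSIM = (2*mx*my + C1)(2*cov + C2) / ((mx^2 + my^2 + C1)(vx + vy + C2)),
    with C1 = (0.01 * L)^2 and C2 = (0.03 * L)^2 for data range L.
    """
    x = pred[mask].astype(float)
    y = target[mask].astype(float)
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    mx, my = x.mean(), y.mean()
    vx = np.mean((x - mx) ** 2)          # variance of the prediction
    vy = np.mean((y - my) ** 2)          # variance of the reference
    cov = np.mean((x - mx) * (y - my))   # covariance between them
    num = (2 * mx * my + c1) * (2 * cov + c2)
    den = (mx ** 2 + my ** 2 + c1) * (vx + vy + c2)
    return float(num / den)
```

Identical inputs give an SSIM of 1, and any added noise lowers it; a windowed implementation would additionally capture local structural differences that this global version averages away.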

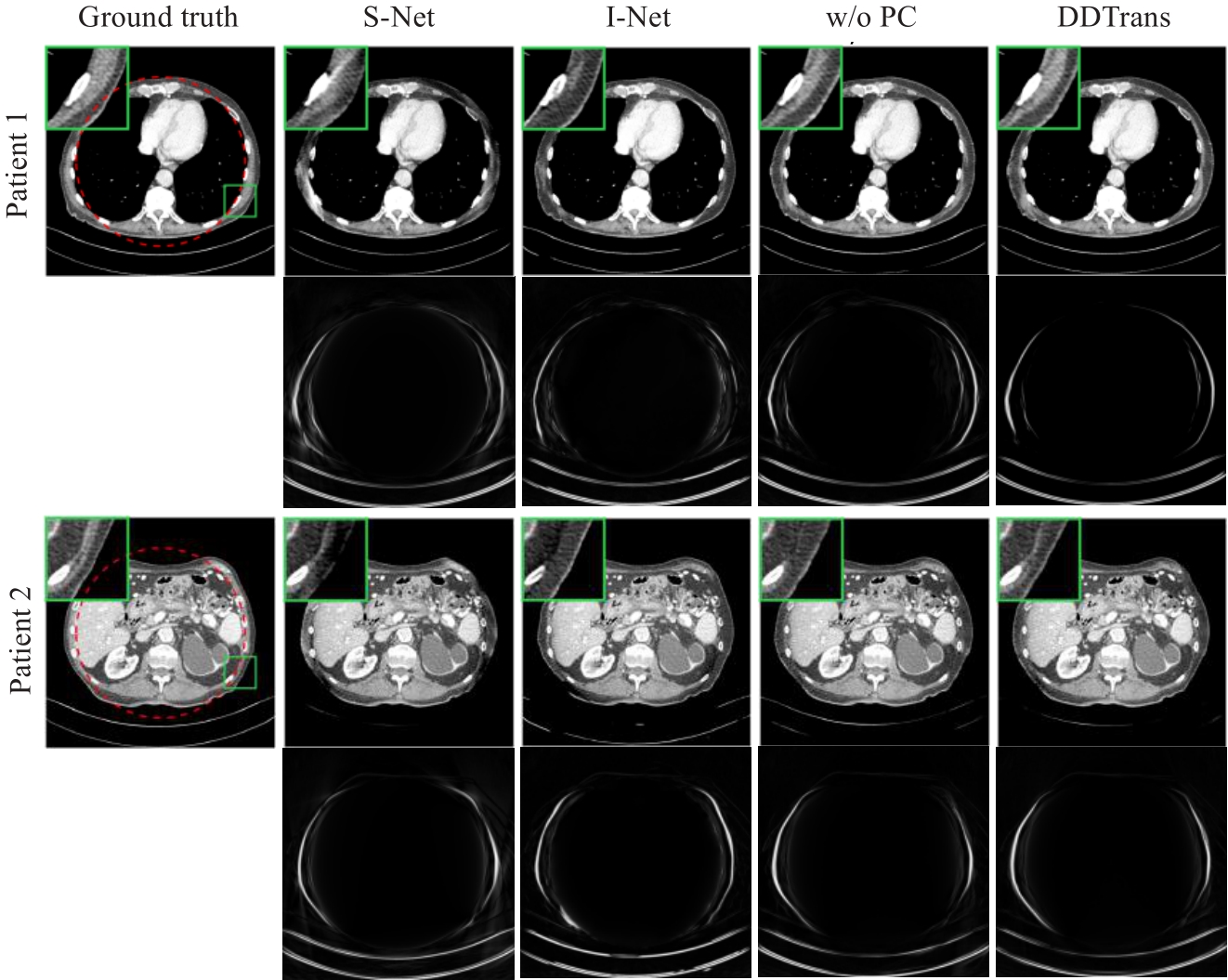

Fig.6 Ablation results for the projection-domain-only network (S-Net), the image-domain-only network (I-Net), the dual-domain network without the projection consistency constraint (w/o PC), and DDTrans. Display window: [-200, 200] HU.


Tab.3 Quantitative comparison of ablation experiment results

| Methods | RMSE (Inside FOV) | RMSE (Outside FOV) | SSIM (Inside FOV) | SSIM (Outside FOV) | PSNR (Inside FOV, dB) | PSNR (Outside FOV, dB) |
|---|---|---|---|---|---|---|
| S-Net | 6.5405 | 120.0671 | 0.9982 | 0.8898 | 49.0927 | 11.5285 |
| I-Net | 11.3366 | 130.1608 | 0.9965 | 0.9124 | 41.9385 | 10.7919 |
| w/o PC | 6.5004 | 117.1258 | 0.9989 | 0.9232 | 50.4997 | 11.7845 |
| DDTrans | 5.4079 | 116.9005 | 0.9991 | 0.9250 | 52.4910 | 12.0010 |

Fig.7 Comparison of the generalization capability of different algorithms on shoulder data. Display window: [-500, 500] HU. The second and fourth rows show the absolute residual images of each method's result with respect to the ground truth.


Tab.4 Quantitative comparison of the generalization capability of different methods

| Methods | RMSE (Inside FOV) | RMSE (Outside FOV) | SSIM (Inside FOV) | SSIM (Outside FOV) | PSNR (Inside FOV, dB) | PSNR (Outside FOV, dB) |
|---|---|---|---|---|---|---|
| FBP | 341.9528 | 460.3208 | 0.6594 | 0.5833 | 14.7162 | 9.5040 |
| ATRACT | 100.3245 | 261.8279 | 0.8597 | 0.7811 | 24.1792 | 13.2714 |
| WCE | 22.6755 | 187.2308 | 0.9611 | 0.8212 | 41.6179 | 16.1640 |
| ES-UNet | 36.4977 | 149.5005 | 0.9148 | 0.8647 | 40.7829 | 16.2713 |
| OneNetDBP | 108.2811 | 159.9267 | 0.8676 | 0.8098 | 23.5470 | 15.7454 |
| DGAN | 19.9203 | 174.9319 | 0.9800 | 0.8205 | 41.3990 | 15.4051 |
| DDTrans | 16.9099 | 144.0732 | 0.9897 | 0.8891 | 42.4004 | 16.7163 |


Tab.5 Time required by different methods to reconstruct 526 image slices

| Methods | FBP | ATRACT | WCE | ES-UNet | OneNetDBP | DGAN | DDTrans |
|---|---|---|---|---|---|---|---|
| Time consumption (s) | 85.7 | 111.3 | 93.9 | 63.5 | 353.5 | 114.1 | 40.6 |
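Tab.5 reports total times for 526 slices. Dividing by the slice count gives an average per-slice cost, and dividing by the DDTrans total gives how many times longer each method took. This is plain arithmetic on the tabulated values; the hardware and batch settings behind those totals are not stated in this excerpt, so the ratios only hold under the authors' setup.

```python
# Total times (seconds) to reconstruct 526 slices, copied from Tab.5.
TOTAL_TIME_S = {
    "FBP": 85.7, "ATRACT": 111.3, "WCE": 93.9, "ES-UNet": 63.5,
    "OneNetDBP": 353.5, "DGAN": 114.1, "DDTrans": 40.6,
}
N_SLICES = 526

# Average wall-clock cost per slice, in milliseconds.
per_slice_ms = {m: 1000.0 * t / N_SLICES for m, t in TOTAL_TIME_S.items()}
# How many times longer each method took than DDTrans (1.0 for DDTrans itself).
ratio_to_ddtrans = {m: t / TOTAL_TIME_S["DDTrans"] for m, t in TOTAL_TIME_S.items()}

for method in TOTAL_TIME_S:
    print(f"{method:<10} {per_slice_ms[method]:6.1f} ms/slice  "
          f"{ratio_to_ddtrans[method]:4.2f}x DDTrans")
```

For instance, DDTrans averages roughly 77 ms per slice, and OneNetDBP takes about 8.7 times as long in total.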

